Radeon Instinct Hardware: Polaris, Fiji, Vega - AMD Announces Radeon Instinct: GPU Accelerators for Deep Learning, Coming In 2017

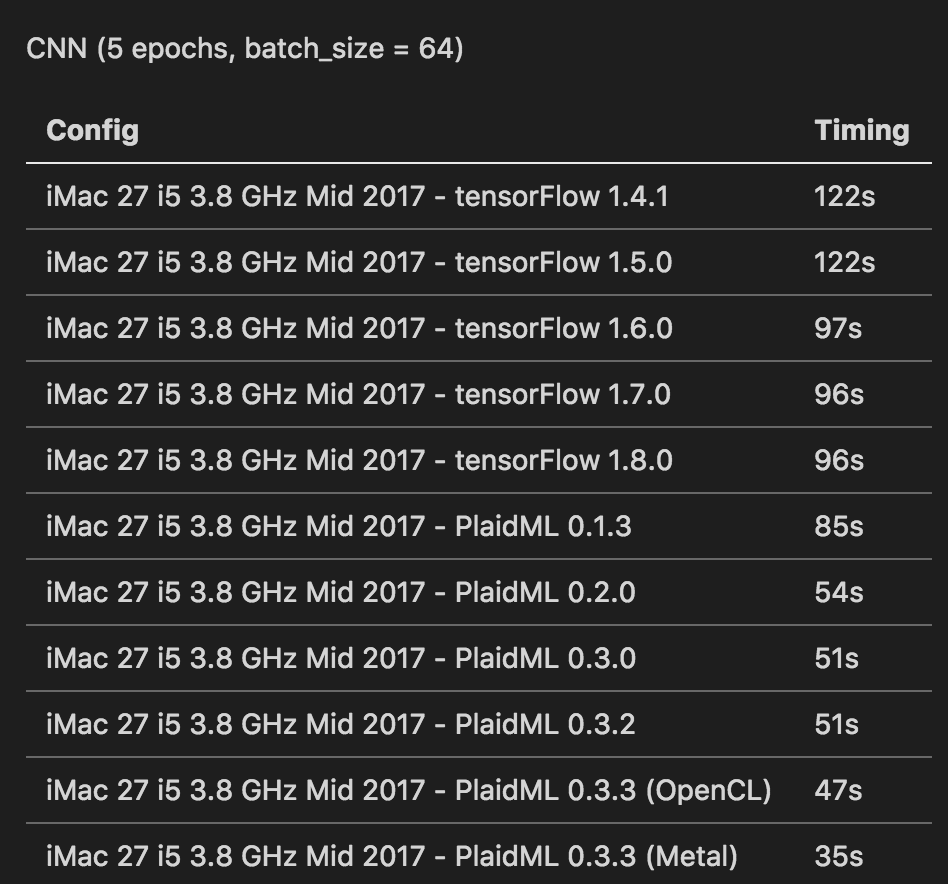

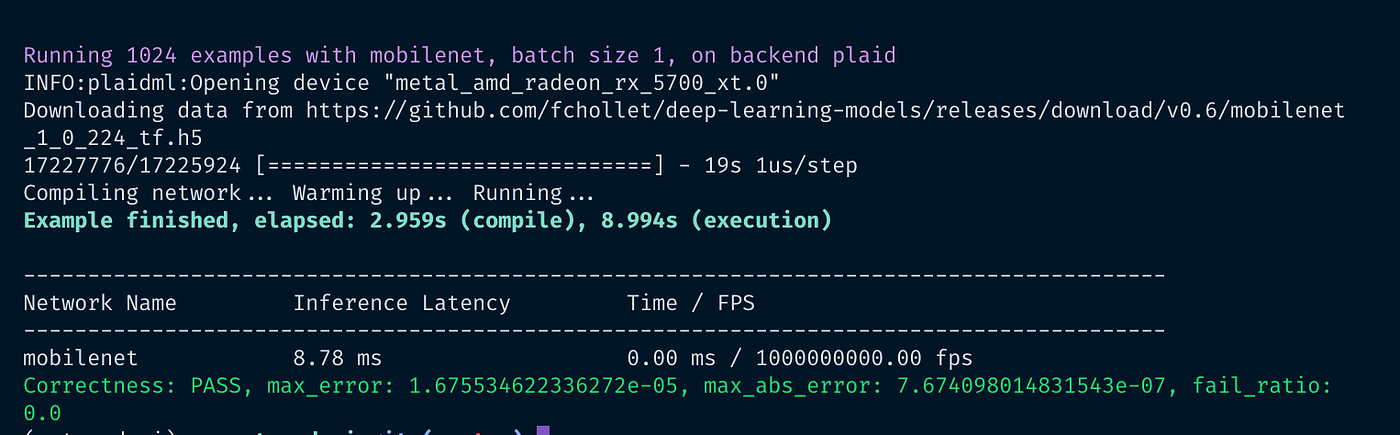

AMD & Microsoft Collaborate To Bring TensorFlow-DirectML To Life, Up To 4.4x Improvement on RDNA 2 GPUs

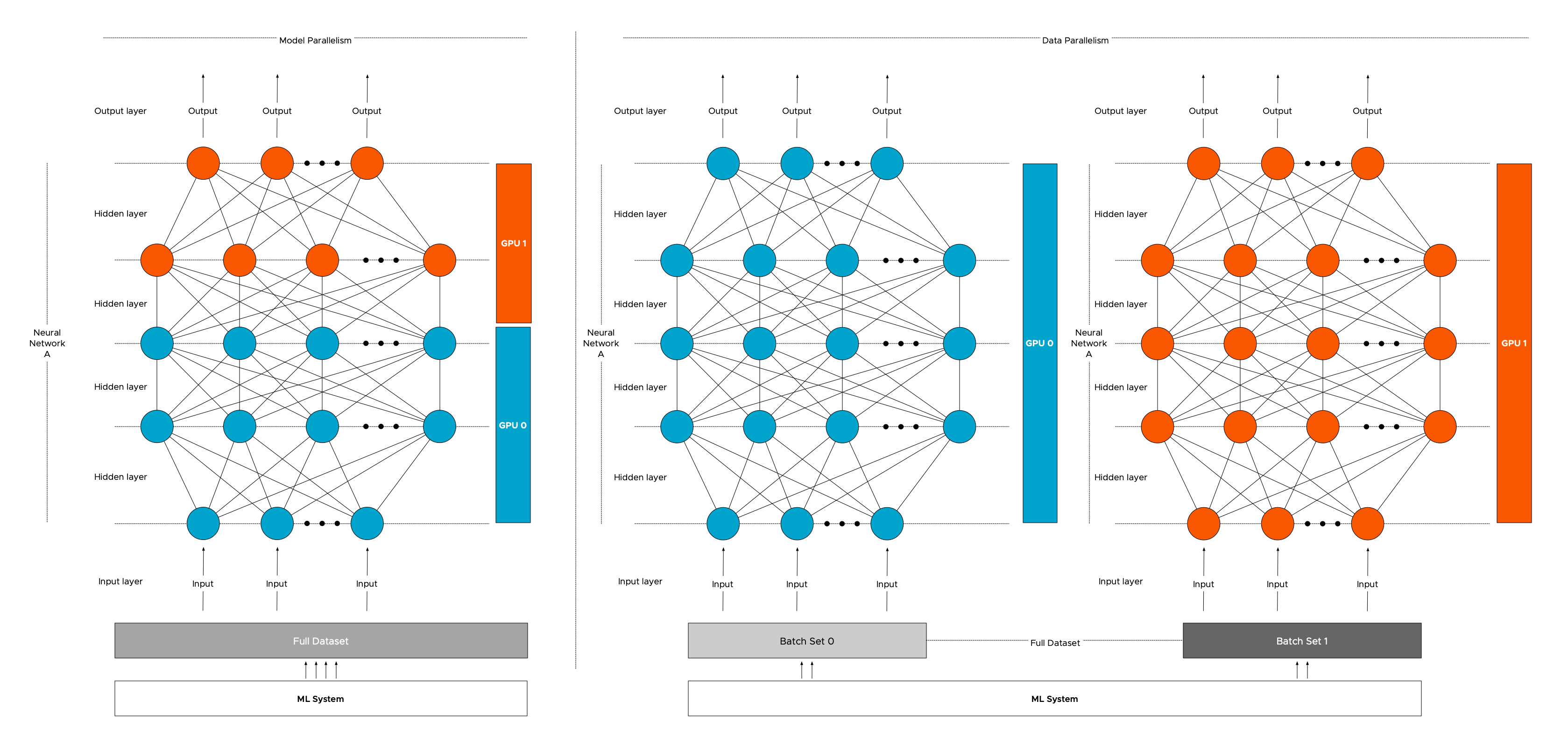

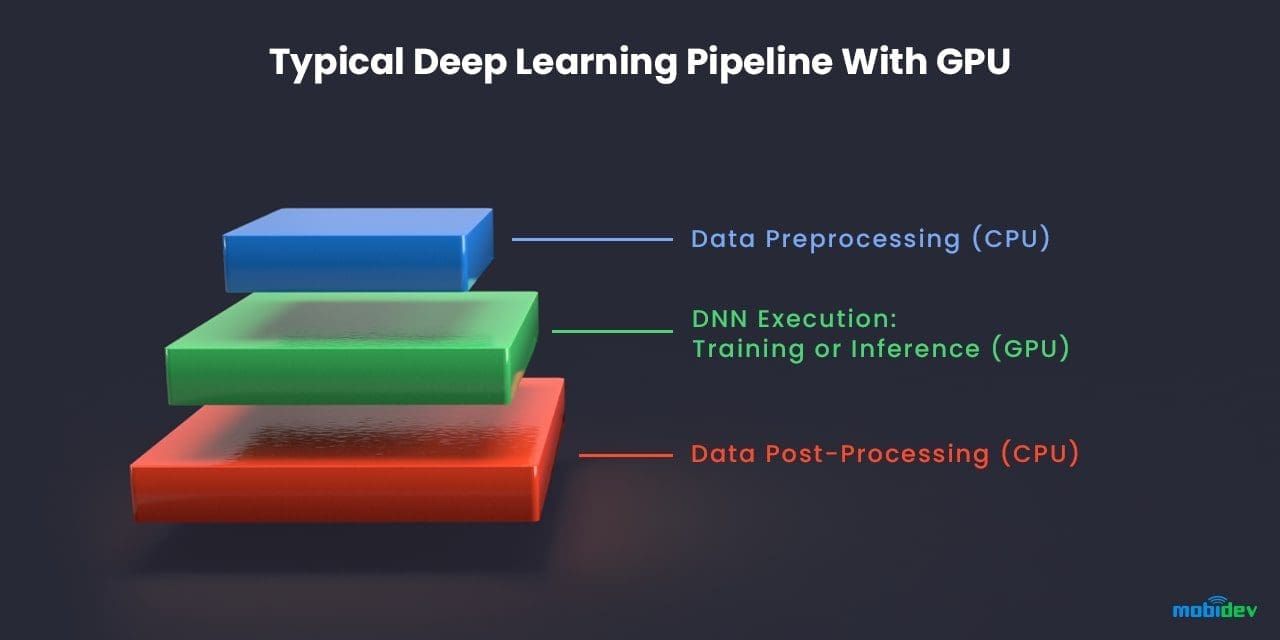

Software, Servers, & Closing Thoughts

![Figure 1: AMD ROCm 5.0 deep learning and HPC stack components](/filters:no_upscale()/news/2021/12/amd-deep-learning-accelerator/en/resources/1Fig-1-AMD-ROCm-50-deep-learning-and-HPC-stack-components-1638458418712.jpg)